From NVIDIA’s 800Vdc power architecture to the open Deschutes CDU standard, this year’s OCP Summit highlighted breakthroughs across the full spectrum of power, cooling, and rack technologies shaping AI data centers.

The Open Compute Project (OCP), founded in 2011 to promote open, efficient data center design, has become the leading forum shaping AI-era infrastructure. Its annual Global Summit, now a focal point for next-generation discussions on power, cooling, and rack and server architecture, was held last week in San Jose, Calif., drawing more than 10,000 participants. The non-profit's reach continues to expand through new subprojects that broaden its scope across data center systems. The clearest signal of its growing influence came with the announcement that NVIDIA would join its board, a move underscoring how even the industry's pace-setter sees value in aligning more closely with the organization.

Among the most pivotal technological developments, NVIDIA provided deeper detail on its 800Vdc power distribution architecture for data centers, adding substance to a disruptive concept first hinted at in a May blog post. This triggered a wave of announcements from power and component suppliers: Vertiv previewed new products expected next year; Eaton introduced a new reference design; Flex expanded its AI infrastructure platform; Schneider Electric unveiled an 800Vdc sidecar rack; ABB announced new DC power products leveraging its solid‑state expertise; Legrand deepened its focus on OCP‑based power and rack solutions; and Texas Instruments introduced new power management chips.

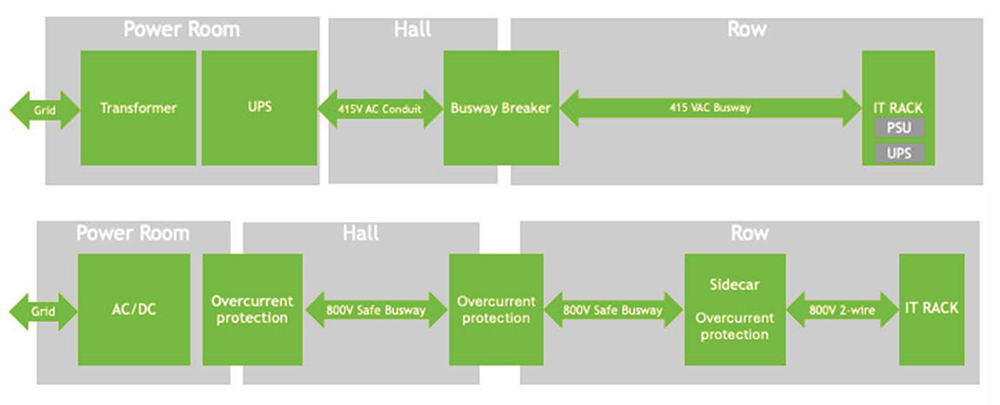

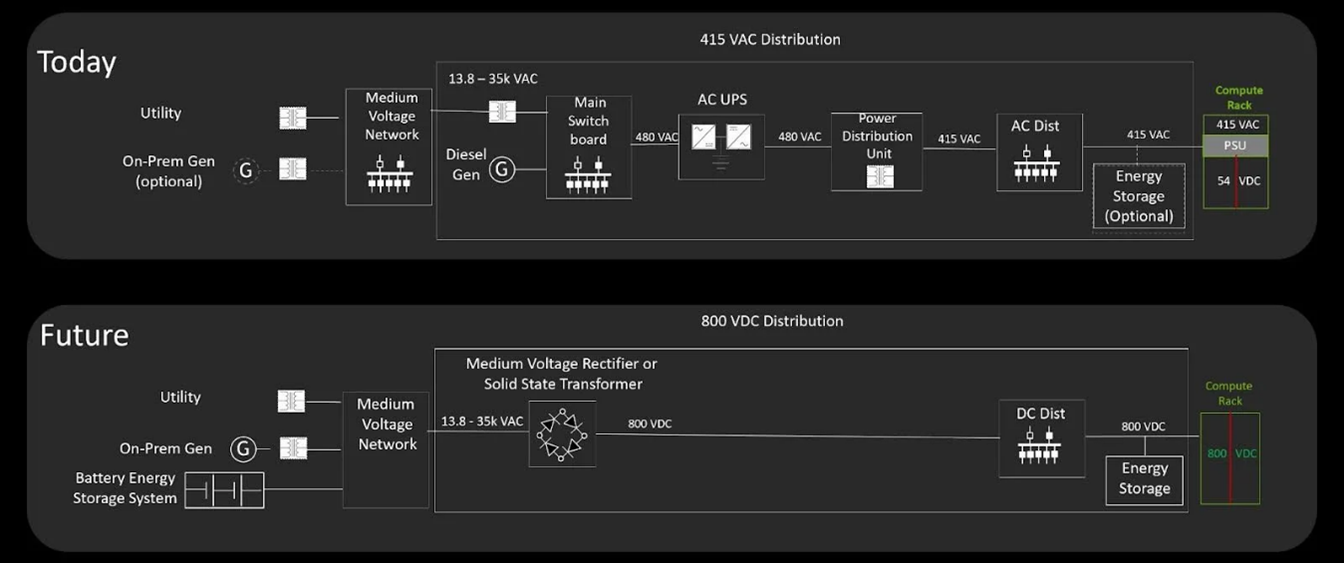

After years of liquid cooling dominating headlines as the defining innovation in data center design, power distribution has now taken center stage. Roadmaps point to accelerated compute racks exceeding 500 kW per cabinet, introducing new challenges for delivering power efficiently to AI clusters. NVIDIA's proposed solution marks a decisive break from conventional 415/480 Vac layouts, moving toward a higher-voltage 800 Vdc bus that spans the whitespace and is fed directly from a single step-down switchgear integrated with a solid-state transformer connected to utility and microgrid systems.
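To put the 500 kW figure in perspective, here is a minimal back-of-the-envelope sketch of how bus voltage drives current and resistive loss on a DC bus. It uses only I = P / V and the fact that, for a fixed conductor resistance, conduction loss scales with I². The 50 V comparison bus is an assumption chosen for illustration and does not come from the announcements discussed here; the function and constant names are likewise hypothetical.

```python
def bus_current_a(power_w: float, voltage_v: float) -> float:
    """Current drawn from a DC bus delivering power_w at voltage_v (I = P / V)."""
    return power_w / voltage_v


def relative_conduction_loss(voltage_v: float, reference_voltage_v: float) -> float:
    """I^2 * R loss at voltage_v relative to the reference bus.

    Assumes the same delivered power and the same conductor resistance,
    so the ratio reduces to (V_ref / V)^2.
    """
    return (reference_voltage_v / voltage_v) ** 2


RACK_POWER_W = 500_000.0   # 500 kW per cabinet, the roadmap figure cited above
BUS_800_V = 800.0          # the 800 Vdc bus described in the article
ASSUMED_LOW_V = 50.0       # ASSUMPTION: illustrative low-voltage DC bus, not from the article

current_800 = bus_current_a(RACK_POWER_W, BUS_800_V)
current_low = bus_current_a(RACK_POWER_W, ASSUMED_LOW_V)
loss_ratio = relative_conduction_loss(BUS_800_V, ASSUMED_LOW_V)

print(f"Current at {BUS_800_V:.0f} Vdc: {current_800:.0f} A")
print(f"Current at {ASSUMED_LOW_V:.0f} Vdc (assumed): {current_low:.0f} A")
print(f"Relative conduction loss at 800 Vdc: {loss_ratio:.4%} of the assumed low-voltage case")
```

Under these assumptions, a 500 kW rack draws 625 A at 800 Vdc versus 10,000 A on the assumed 50 V bus. The sketch ignores conversion losses, conductor sizing, and protection requirements, all of which matter in a real design.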

This transition represents a major architectural shift, though it will unfold gradually. Hybrid deployments bridging existing AC systems with 800 Vdc designs are expected to dominate in the coming years. These transitional architectures will rely on familiar 415/480 Vac power distribution feeding whitespace sidecar units, which will step up and rectify the voltage to 800 Vdc to supply adjacent high-performance racks.

Despite speculation that UPS systems, PDUs, power shelves, and BBUs may become obsolete, these interim designs will continue to sustain demand for such equipment for the foreseeable future. Between now and 2027, when Rubin Ultra chips are expected to reach the market, greater clarity around the end-state architecture should emerge, and collaboration across the ecosystem will bring novel solutions to market. Significant progress is expected in the design and scalable manufacturing of solid-state transformers (SSTs), DC breakers, on-chip power conversion, and other solutions enabling purpose-built AI factories to fully capitalize on the efficiency of these new architectures.

Many of these technologies are already under development. ABB's DC circuit breaker portfolio and its solid-state MV UPS offering provide a solid foundation, but the breaker portfolio is rooted in industrial applications and must evolve to meet the needs of a new customer segment. Vertiv and Schneider Electric, industry heavyweights whose announcements offered only high-level previews of future solutions, are accelerating product development to address these evolving requirements and still have ample time to do so. Eaton stood out as one of the few vendors demonstrating a functional power sidecar unit at OCP, showcasing tangible progress in this emerging architecture and reinforcing its position through SST expertise gained from the acquisition of Resilient Power.

While suppliers are expected to adapt swiftly to new demands, regulatory bodies responsible for guiding the design and safe operation of power solutions, such as the NFPA, often move at a slower pace than the market. Codes and standards will need to evolve accordingly, and uncertainty in this area could become a key obstacle to the broader adoption of cutting-edge higher-voltage designs.

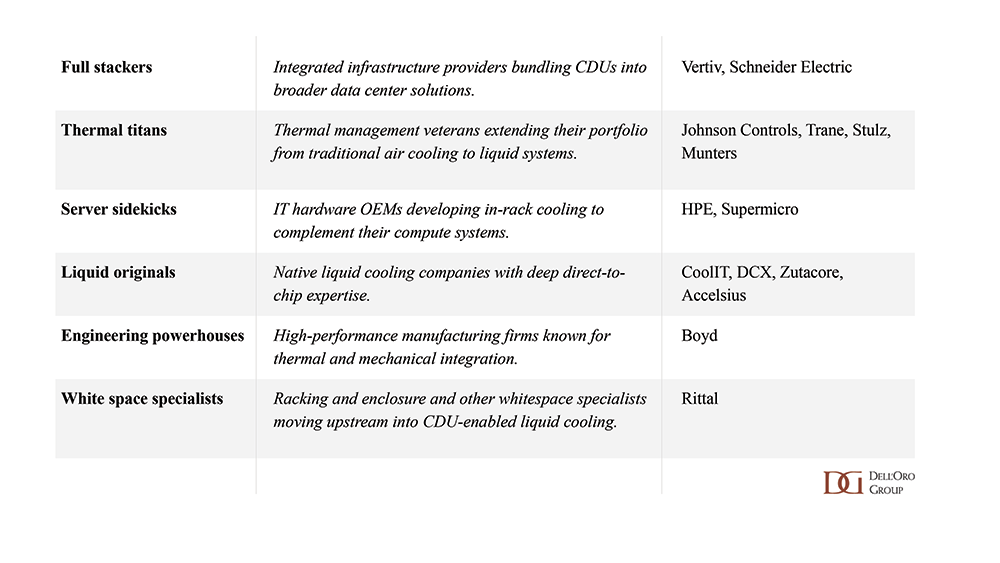

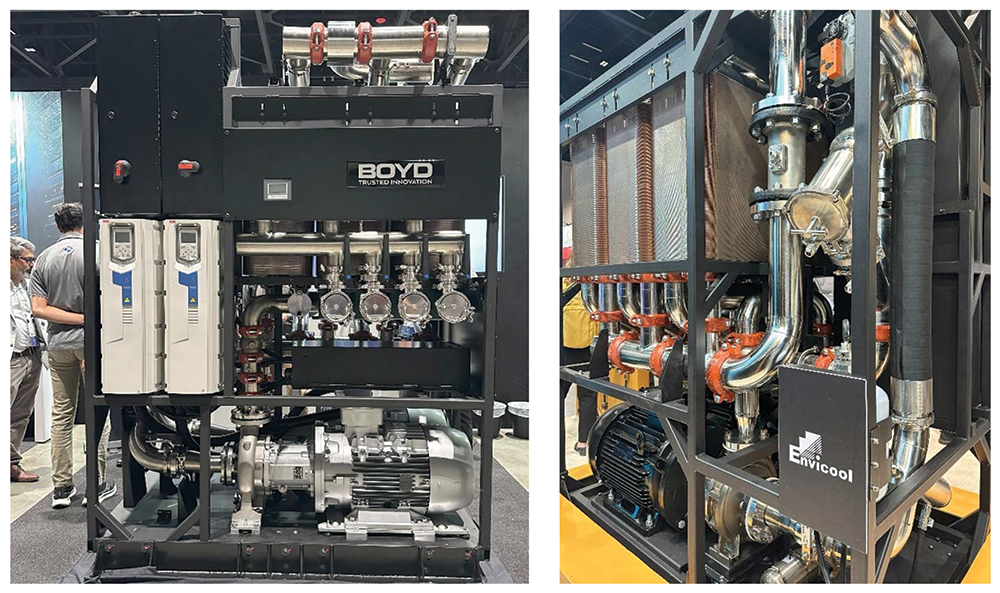

Although power has dominated recent discussions, liquid cooling sessions remained highly popular at OCP. I even found myself standing in a packed room for what I assumed would be a niche discussion on turbidity and electrical conductivity measurements in glycol fluids. Yet the most significant development in this area was the introduction of the open-standard Deschutes CDU. With the new specification expected to attract additional entrants to the market, our preliminary research, which initially counted just over 40 CDU manufacturers, has quickly become outdated, with over 50 companies now in our mapping. However, new entrants continue to face the same challenges: while a CDU may appear to be just pipes, pumps, and filters, the true differentiation lies in system design expertise and intelligent controls, capabilities that remain difficult to replicate.

These trends underscore OCP's growing role as the launchpad for new data center design, bringing breakthrough technologies to the forefront. This year's discussions, from higher-voltage DC power to open liquid cooling, are shaping the blueprint for the next generation of AI factories. These architectures point toward a new model for hyperscale infrastructure, the result of collaboration among hyperscalers, chipmakers, infrastructure specialists, and system integrators. Much remains in flux, with further developments expected leading into SC25 and NVIDIA GTC 2026. Stay tuned, and connect with us at Dell'Oro Group to explore our latest research or discuss these trends defining the data center of the future.