Artificial intelligence (AI) is currently having a profound impact on the data center industry. Much of this impact can be attributed to OpenAI’s launch of ChatGPT in late 2022, which rapidly gained popularity for its remarkable ability to provide sophisticated, human-like responses to queries. As a result, generative AI, a subset of AI technology, became the focal point of industry events, earnings presentations, and vendor ecosystem discussions in the first half of 2023. The excitement is warranted: generative AI has already driven tens of billions of dollars in investment and is forecast to continue lifting data center capex to over $500 billion by 2027. However, because training and deploying the large language models (LLMs) that support generative AI applications requires a significant expansion of computing power, data centers will need architectural changes.

While the hardware required to support such AI applications is new to many, one segment of the data center industry has been deploying this kind of infrastructure for years. This segment is often known as the high-performance computing (HPC) or supercomputing industry. Historically, it has been funded primarily by governments and higher education institutions to deploy some of the world’s most complex and sophisticated computer systems.

What is new with generative AI is that it is spreading AI applications, and the infrastructure to support them, to the much wider enterprise and service provider markets. Learning from the HPC industry gives us an idea of what that infrastructure may start to look like.

AI Infrastructure Needs More Power and Liquid Cooling

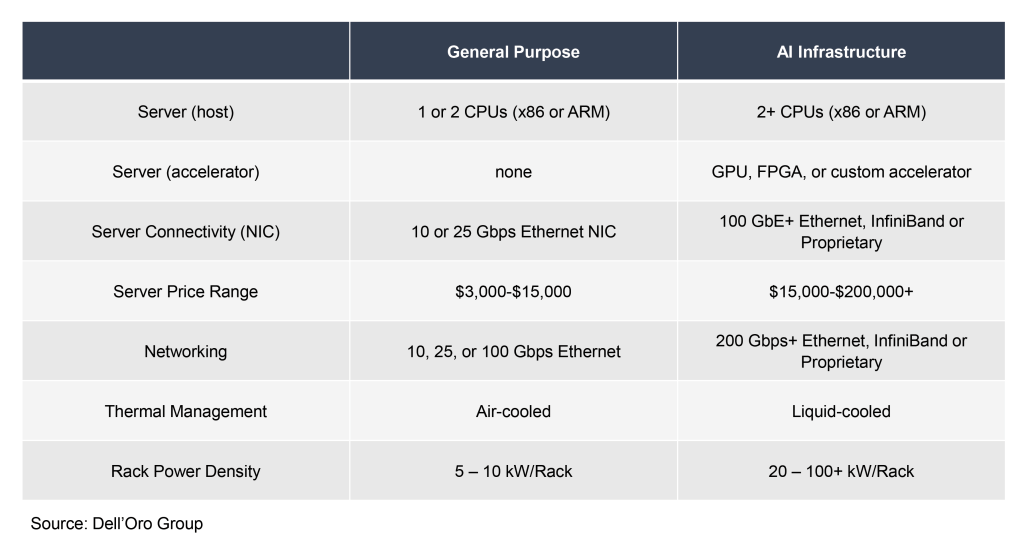

To summarize the implications shown in Figure 1, AI workloads will require more computing power and higher networking speeds. This will lead to higher rack power densities, which has significant implications for Data Center Physical Infrastructure (DCPI). For facility power infrastructure, also referred to as grey space, architectural changes are expected to be limited. AI workloads should increase demand for backup power (UPS) and for power distribution to the IT rack (cabinet PDU and busway), but they won’t mandate any significant technology changes. Where AI infrastructure will have a transformational impact on DCPI is in a data center’s white space.

First, the substantial power consumption of AI IT hardware creates a need for higher power-rated rack PDUs. At these power ratings, the costs associated with potential failures or inefficiencies can be high. This is expected to push end users toward intelligent rack PDUs, which can remotely monitor and manage power consumption and environmental factors. These rack PDUs cost significantly more than basic rack PDUs, which don’t give an end user the ability to monitor or manage rack power distribution.

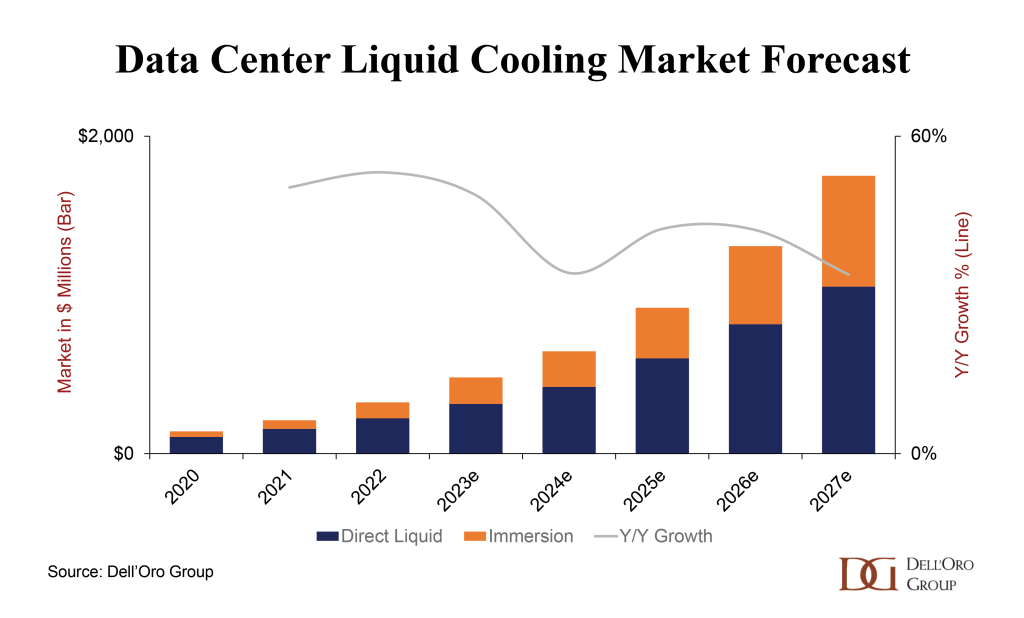

Even more transformative for data center architectures is the need for liquid cooling to manage the higher heat loads produced by the next-generation CPUs and GPUs that run AI workloads. Adoption of liquid cooling, including both direct liquid cooling and immersion cooling, has been growing across the wider data center industry and is expected to accelerate alongside the deployment of AI infrastructure. However, given the historically long runway associated with adopting liquid cooling, we anticipate that the influence of generative AI on liquid cooling will be limited in the near term. It remains possible to deploy the current generation of IT infrastructure with air cooling, but at the expense of hardware utilization and efficiency.
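To make the air-cooling constraint more concrete, the standard sensible-heat relation for air, P = ρ · c_p · V̇ · ΔT, shows that the airflow needed to carry away a rack’s heat grows linearly with rack power for a fixed inlet-to-outlet temperature rise. The Python sketch below is illustrative only: the air density and specific heat are approximate room-condition values, and the rack powers and 12 K temperature rise are hypothetical inputs, not figures from our research.

```python
# Sensible-heat relation for air: P = rho * cp * V_dot * dT, solved for V_dot.
# Air properties are approximations at roughly room temperature and sea level (assumption).
AIR_DENSITY_KG_M3 = 1.2       # kg/m^3
AIR_CP_J_PER_KG_K = 1005.0    # specific heat of dry air, J/(kg*K)
M3S_TO_CFM = 2118.88          # 1 m^3/s is about 2118.88 cubic feet per minute


def required_airflow_m3s(rack_power_w: float, delta_t_k: float) -> float:
    """Volumetric airflow (m^3/s) needed to remove rack_power_w watts
    of heat with an air temperature rise of delta_t_k kelvin."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return rack_power_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_PER_KG_K * delta_t_k)


if __name__ == "__main__":
    # Hypothetical rack densities, chosen only to show the linear scaling.
    for kw in (10, 40, 100):
        flow = required_airflow_m3s(kw * 1000, delta_t_k=12.0)
        print(f"{kw:>4} kW rack -> {flow:.2f} m^3/s (~{flow * M3S_TO_CFM:,.0f} CFM)")
```

Because the relationship is linear, a 40 kW rack needs four times the airflow of a 10 kW rack at the same temperature rise.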

To address this challenge, some end users are retrofitting their existing facilities with closed-loop, air-assisted liquid cooling systems. Such infrastructure can take the form of a rear-door heat exchanger (RDHx) or a direct liquid cooling system that uses liquid to capture the heat generated within the rack or server and reject it at the rear of the rack or server into the hot aisle. This design allows data center operators to gain some of the advantages of liquid cooling without significant investment to redesign a facility. However, to achieve the desired efficiency of AI hardware at scale, purpose-built liquid-cooled facilities will be required. We expect the current interest in liquid cooling to start materializing in deployments by 2025, with liquid cooling revenues forecast to approach $2 billion by 2027.

Power Availability May Disrupt the AI Hype

Plans to incorporate AI workloads into future data center construction are already materializing. This was the primary reason for our recent upward revision to our Data Center Physical Infrastructure market 5-year outlook, with revenue now forecast to grow at a 10% CAGR through 2027. But despite all the prospective market growth AI workloads are expected to generate for the data center industry, some notable factors could slow that growth. At the top of that list is power availability. The Covid-19 pandemic accelerated the pace of digitalization, spurring a wave of new data center construction. However, as that demand materialized, supply chains struggled to keep up, pushing DCPI lead times beyond a year at their peak. Now, as supply chain constraints ease, DCPI vendors are working through elevated backlogs and starting to reduce lead times.
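As a quick check on what a 10% CAGR implies, compounding 10% annually over five periods multiplies the starting value by 1.1^5, or roughly 1.61. The sketch below assumes the 5-year outlook covers five annual compounding periods ending in 2027; the helper names are hypothetical, and no actual market figures are used.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor implied by compounding `cagr` for `years` annual periods."""
    return (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Inverse: the CAGR implied by growing from `start` to `end` over `years` periods."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Assumes five annual compounding periods (e.g. 2022 -> 2027).
    multiple = growth_multiple(0.10, 5)
    print(f"10% CAGR over 5 years -> {multiple:.3f}x starting revenue")
    # A hypothetical base value of 100 round-trips back to the same CAGR.
    print(f"round trip: {implied_cagr(100.0, 100.0 * multiple, 5):.4f}")
```

The inverse function recovers the growth rate from any start and end values, which is a convenient way to sanity-check a forecast’s stated CAGR.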

Yet demand for AI workloads is forming another wave of growth for the data center industry. This double shot of growth has created a gap between the growing energy needs of the data center industry and the pace at which utilities can supply power to the desired locations. As a result, data center service providers are exploring a “Bring Your Own Power” model as a potential solution. While the feasibility of this model is still being determined, data center providers are eager for an innovative approach to support their long-term growth strategies, with the surge in AI workloads being a central driver.

As the need for more DCPI is balanced against available power, one thing is clear: AI is ushering in a new era for DCPI. In this era, DCPI will not only play a critical role in enabling data center growth but will also help define performance and cost and drive progress toward sustainability. This is a distinct shift from the role DCPI played historically, particularly compared to nearly a decade ago, when DCPI was almost an afterthought.

With this tidal wave of AI growth quickly approaching, it’s critical to address DCPI requirements within your AI strategy. Failing to do so could leave AI IT hardware with nowhere to plug in.