NVIDIA’s Vision for the Future of AI Data Centers: Scaling Beyond Limits

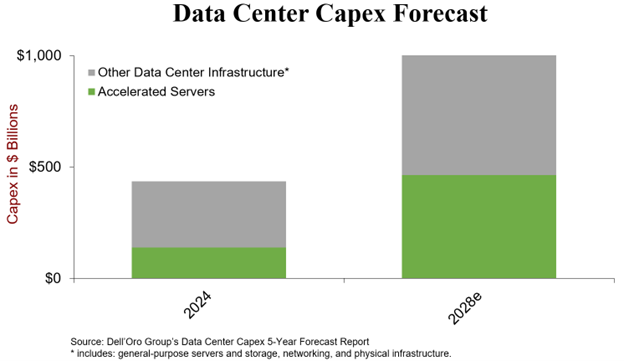

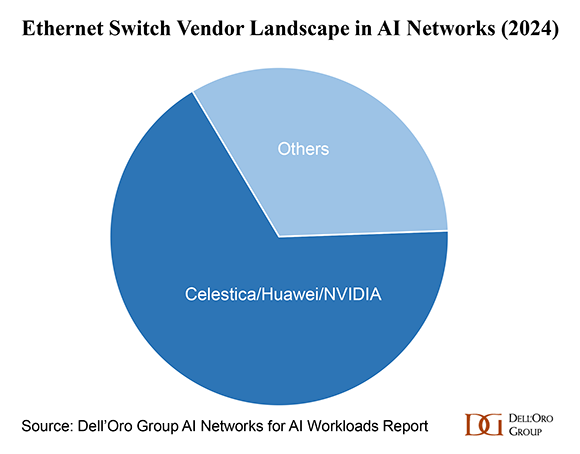

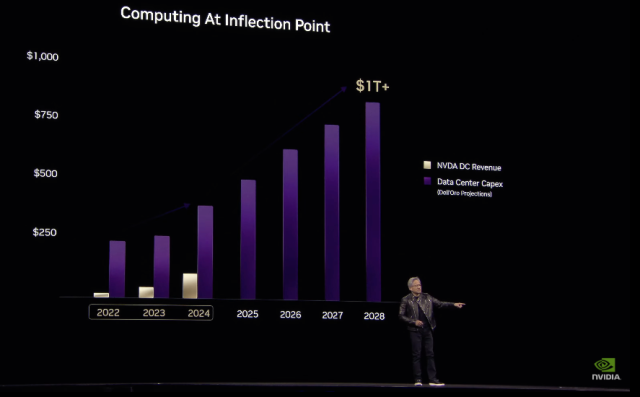

At NVIDIA GTC, Jensen Huang’s keynote highlighted NVIDIA’s growing presence in the data center market, which Dell’Oro Group forecasts will surpass $1 trillion by 2028. NVIDIA is no longer just a chip vendor. It has evolved into a provider of fully integrated, rack-scale solutions that encompass compute, networking, and thermal management. During GTC, NVIDIA also announced an AI Data Platform that integrates enterprise storage with NVIDIA accelerated computing, allowing AI agents to deliver real-time business insights to enterprise customers. Together, these moves are redefining how AI workloads are deployed at scale.

The Blackwell Platform: Optimized for AI Training and Reasoning

NVIDIA’s Blackwell platform represents a major leap in AI acceleration. It excels at training deep learning models, and it is also optimized for inference and reasoning, two key drivers of hyperscale capital expenditure growth in 2025. Reasoning models, which generate a large number of tokens, operate differently from conventional models: rather than answering a query directly, they emit intermediate “thinking tokens” to work through and refine a response, loosely mimicking cognitive reasoning. Because every thinking token must be generated, this process significantly increases computational demands.
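A rough way to see the effect is the common rule of thumb that a dense transformer spends about 2N floating-point operations per generated token, where N is the parameter count. The Python sketch below applies that approximation; the 70B model size and the token counts are hypothetical values chosen for illustration, not NVIDIA figures, and attention and KV-cache costs are ignored.

```python
# Rough inference-compute comparison: direct answer vs. reasoning model.
# Assumption: ~2 * N FLOPs per generated token for a dense transformer with
# N parameters (a common approximation that ignores attention/KV-cache costs).

def generation_flops(num_params: float, output_tokens: int) -> float:
    """Approximate FLOPs needed to generate `output_tokens` tokens."""
    return 2.0 * num_params * output_tokens

# Hypothetical numbers chosen only for illustration.
PARAMS = 70e9             # a 70B-parameter dense model
ANSWER_TOKENS = 500       # tokens in the final answer
THINKING_TOKENS = 10_000  # extra "thinking" tokens emitted before answering

direct = generation_flops(PARAMS, ANSWER_TOKENS)
reasoning = generation_flops(PARAMS, ANSWER_TOKENS + THINKING_TOKENS)

print(f"direct answer:   {direct:.2e} FLOPs")
print(f"reasoning model: {reasoning:.2e} FLOPs")
print(f"ratio:           {reasoning / direct:.0f}x")
```

Under these assumptions compute grows linearly with generated tokens, so spending 10,000 tokens thinking before a 500-token answer costs about 21 times as much as answering directly.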

The Evolution of Accelerated Computing

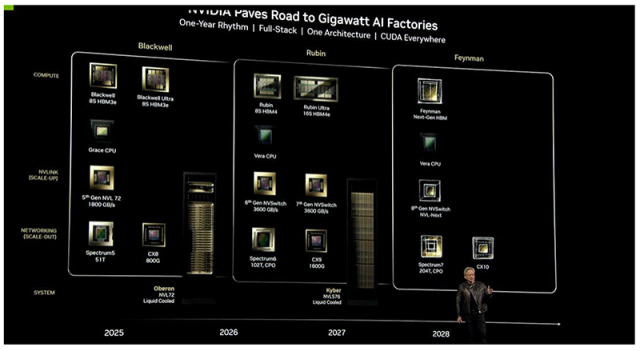

The unit of accelerated computing is evolving rapidly. It started with single accelerators, progressed to integrated servers like the NVIDIA DGX, and has now reached rack-scale systems like the NVIDIA GB200 NVL72. Looking ahead, NVIDIA aims to scale even further with the upcoming Vera Rubin Ultra platform, featuring 576 GPUs interconnected in a single rack. Scaling up AI clusters introduces new challenges in interconnects and power density, and as compute nodes scale into the hundreds of thousands (and beyond), the industry needs to address several key challenges:

1) Increasing Rack Density

AI data centers aim to pack GPUs as closely as possible to create a coherent compute fabric for large language model (LLM) training and real-time inference. The NVL72 already operates at extremely high density, which requires liquid cooling to dissipate heat. As systems scale further, interconnect distances will grow, raising a key question: will copper cabling remain viable, or will the industry need to transition to optical interconnects despite their higher cost and power consumption?

2) The Shift to Multi-Die GPUs

One way to boost computational capacity has been to increase GPU die size. However, GPU dies have already reached the reticle limit, the largest area a lithography scanner can pattern in a single exposure, so platforms such as Vera Rubin must scale through multi-die architectures. Adding dies increases the physical footprint and the interconnect distances, posing further engineering challenges.
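The reticle limit is set by the scanner’s maximum exposure field, commonly 26 mm × 33 mm (858 mm²). The sketch below uses hypothetical target areas to show why any design needing more compute silicon than that must be split across several dies. It ignores yield, scribe lines, and the common practice of keeping dies somewhat below the limit.

```python
import math

# Maximum exposure field of current lithography scanners: 26 mm x 33 mm.
RETICLE_LIMIT_MM2 = 26 * 33  # 858 mm^2

def dies_needed(total_silicon_mm2: float,
                max_die_mm2: float = RETICLE_LIMIT_MM2) -> int:
    """Minimum number of dies (each at most reticle-sized) for a given silicon area."""
    return math.ceil(total_silicon_mm2 / max_die_mm2)

# Hypothetical compute-silicon targets for a single GPU package.
for target in (800, 1600, 3200):
    print(f"{target} mm^2 of compute -> {dies_needed(target)} die(s)")
```

Each additional die also needs die-to-die links and package area, which is the footprint and interconnect-distance cost described above.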

3) Surging Rack Power Density

As GPU size and node count increase, rack power density is skyrocketing. NVIDIA’s GB200 NVL72 racks already consume about 132 kW, and the upcoming Rubin Ultra NVL576 is projected to require 600 kW per rack. Given that AI data centers typically operate within a 50 MW power envelope, fewer than 100 such racks can be powered in a single facility (50 MW ÷ 600 kW ≈ 83, before any cooling overhead). This constraint demands a new approach to scaling AI infrastructure.
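The rack-count arithmetic can be made explicit. This minimal sketch assumes identical racks and models cooling and power-conversion overhead with a single power usage effectiveness (PUE) factor; the PUE of 1.2 is an illustrative assumption, not a figure from the keynote.

```python
# Back-of-the-envelope: how many racks fit within a facility's power budget?
# Assumption: facility power = IT load * PUE; PUE 1.0 means zero overhead.

def max_racks(facility_mw: float, rack_kw: float, pue: float = 1.0) -> int:
    """Whole racks that fit in facility_mw, given per-rack IT power and PUE."""
    it_budget_kw = facility_mw * 1000 / pue
    return int(it_budget_kw // rack_kw)

FACILITY_MW = 50
for name, rack_kw in (("GB200 NVL72", 132), ("Rubin Ultra (projected)", 600)):
    print(f"{name}: {max_racks(FACILITY_MW, rack_kw)} racks at PUE 1.0, "
          f"{max_racks(FACILITY_MW, rack_kw, pue=1.2)} racks at PUE 1.2")
```

With no overhead, a 50 MW site supports 83 racks at 600 kW; a PUE of 1.2 lowers that to 69, while the same site could host 378 racks at 132 kW.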

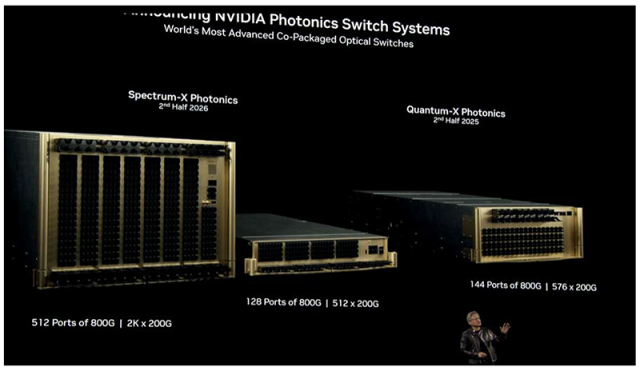

4) Disaggregating AI Compute Across Data Centers

As power limitations become a bottleneck, AI clusters may need to be strategically distributed across multiple data centers based on power availability. This introduces the challenge of interconnecting these geographically dispersed clusters into a single virtual AI compute fabric. Coherent optics and photonics-based networking may be necessary to enable low-latency interconnects between data centers separated by miles. NVIDIA’s recently introduced silicon photonics switch may be part of this solution, at least from the standpoint of lowering power consumption, but additional innovations in data center interconnect architectures will likely be required to meet the demands of large-scale distributed AI workloads.
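Distance alone sets a floor on that latency. The sketch below estimates round-trip propagation delay over fiber, assuming a group index of about 1.47 for standard single-mode fiber (roughly 4.9 µs per km one way) and ignoring switching, serialization, and protocol overheads. The site separations are hypothetical.

```python
# Propagation delay over optical fiber between two data centers.
# Assumption: group index ~1.47 for standard single-mode fiber, so light
# travels at roughly c / 1.47. Switching, serialization, and protocol
# overheads are ignored.

SPEED_OF_LIGHT_KM_PER_S = 299_792.458
FIBER_GROUP_INDEX = 1.47
KM_PER_MILE = 1.609344

def round_trip_us(distance_km: float) -> float:
    """Round-trip fiber propagation delay in microseconds."""
    one_way_s = distance_km * FIBER_GROUP_INDEX / SPEED_OF_LIGHT_KM_PER_S
    return 2 * one_way_s * 1e6

# Hypothetical site separations in miles.
for miles in (1, 10, 50):
    km = miles * KM_PER_MILE
    print(f"{miles:>3} mi ({km:6.1f} km): ~{round_trip_us(km):7.1f} us round trip")
```

Under these assumptions, 10 miles adds roughly 160 µs of round-trip delay, far more than the nanosecond-scale propagation delay across the few meters of cable inside a rack.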

The Future of AI Data Centers

As NVIDIA continues to innovate, the next generation of AI data centers will need to embrace new networking technologies, reimagine power distribution, and pioneer novel solutions for high-density, high-performance computing. The future of AI isn’t just about more GPUs—it’s about building the infrastructure to support them at scale.