The 3rd AI Hardware Summit took place virtually earlier this month, and it was exciting to see how quickly the ecosystem has evolved and to learn of the challenges the industry has to solve in scaling artificial intelligence (AI) infrastructure. I would like to share highlights of the Summit, along with other notable observations from the industry in the area of accelerated computing.

The proliferation of AI has emerged as a disruptive force, enhancing applications such as image and speech recognition, security, real-time text translation, autonomous driving, and predictive analytics. AI is driving the need for specialized solutions at the chip and system level in the form of accelerated compute servers optimized for training and inference workloads at the data center and the edge.

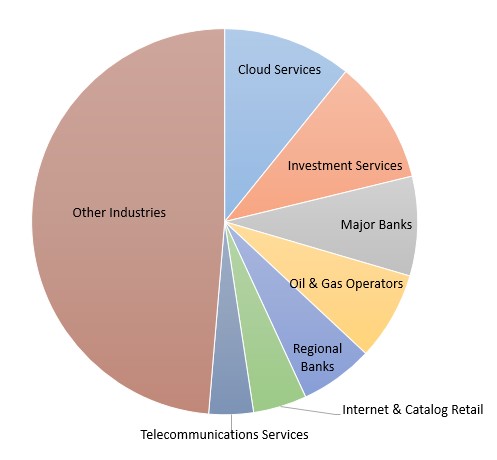

The Tier 1 Cloud service providers in the US and China lead the way in the deployment of these accelerated compute servers. While these servers still account for only a fraction of the Cloud service providers’ overall server footprint, this market is projected to grow at a double-digit compound annual growth rate over the next five years. Most accelerated server platforms shipped today are based on GPUs and FPGAs from Intel, NVIDIA, and Xilinx, but the number of new entrants, especially for the edge AI market, is growing.

However, these Cloud service providers, or enterprises deploying AI applications, simply cannot increase the number of these accelerated compute servers without addressing bottlenecks at the system and data center level. I have identified some notable technology developments that need to be addressed to advance the proliferation of AI:

- Rack Architecture: We have observed a trend of these accelerated processors shifting from a distributed model (i.e., one GPU in each server) to a centralized model consisting of an accelerated compute server with multiple GPUs or accelerated processors. These accelerated compute servers have demanding thermal dissipation requirements, oftentimes requiring unique solutions in form factor, power distribution, and cooling. Some of these systems are liquid-cooled at the chip level, as we have seen with the Google TPU, while more innovative solutions such as liquid immersion cooling of entire systems are being explored. As these accelerated compute servers become more centralized, resources are pooled and shared among many users through virtualization. NVIDIA’s recently launched A100, based on the Ampere architecture, takes virtualization a step further by allowing a single A100 GPU to be partitioned into up to seven GPU instances.

- CPU: The GPU and other accelerated processors are complementary and are not intended to replace the CPU for AI applications. The CPU can be viewed as the taskmaster of the entire system, managing a wide range of general-purpose computing tasks, with the GPU and other accelerated processors performing a narrower range of more specialized tasks. The number of CPUs also needs to be balanced with the number of GPUs in the system; adequate CPU cycles are needed to run the AI application, while sufficient GPU cores are needed to process large training models in parallel. Successive CPU platform refreshes, from either Intel or AMD, are better optimized for inference frameworks and libraries, and support higher I/O bandwidth within and out of the server.

- Memory: My favorite session from the AI Hardware Summit was the panel discussion on memory and interconnects. During that session, experts from Google, Marvell, and Rambus shared their views on how memory performance can limit the scaling of large AI training models. The abundance of data that needs to be processed in memory for large training models on these accelerated compute servers is demanding greater amounts of memory. More memory capacity means more modules and interfaces, which ultimately increases chip-to-chip latency. One proposed solution is the use of 3D stacking to package chips closer together. High Bandwidth Memory (HBM) also helps to minimize the trade-off between memory bandwidth and capacity, but at a premium cost. Ultimately, the panel agreed that there needs to be an optimal balance between memory bandwidth and capacity within the system, while adequately addressing thermal dissipation challenges.

- Network Connectivity: As these accelerated compute nodes become more centralized, a high-speed fabric is needed to ensure the flow of huge amounts of unstructured AI data over the network to accelerated compute servers for in-memory processing and training. These connections can be server-to-server as part of a large cluster, using NVIDIA’s NVLink and InfiniBand (which NVIDIA acquired with Mellanox). Ethernet, now available up to 400 Gbps, is an ideal choice for connecting storage and compute nodes within the network fabric. I believe that these accelerated compute servers will be the most bandwidth-hungry nodes within the data center, and will drive the implementation of next-generation Ethernet. Innovations such as Smart NICs could also be used to minimize packet loss, optimize network traffic for AI workloads, and enable the scaling of storage devices within the network using NVMe over Fabrics.
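
To put the link speeds mentioned above in perspective, here is a rough back-of-the-envelope sketch of how long it would take to move a training data set across a single Ethernet link. The 10 TB data set size and the `transfer_seconds` helper are hypothetical values chosen purely for illustration, and the calculation assumes an ideal link with no protocol overhead, congestion, or storage bottlenecks, so real-world transfers would take longer.

```python
def transfer_seconds(dataset_bytes: float, link_gbps: float) -> float:
    """Ideal time, in seconds, to move dataset_bytes over one link.

    Assumes the full line rate is usable (no protocol overhead or
    congestion), which is an optimistic simplification.
    """
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    bits_to_move = dataset_bytes * 8           # bytes -> bits
    bits_per_second = link_gbps * 1e9          # Gbps -> bits per second
    return bits_to_move / bits_per_second


# Hypothetical 10 TB training data set (decimal terabytes).
DATASET_BYTES = 10e12

for gbps in (100, 400):
    seconds = transfer_seconds(DATASET_BYTES, gbps)
    print(f"{gbps} Gbps link: about {seconds:.0f} seconds")
```

Under these assumptions, moving from 100 Gbps to 400 Gbps cuts the ideal transfer time by a factor of four, which is one way to see why accelerated compute servers are likely to be early adopters of next-generation Ethernet.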

I anticipate that specialized solutions in the form of accelerated computing servers will scale with the increasing demands of AI, and will comprise a growing portion of data center capital expenditures. Data centers could benefit from the deployment of accelerated computing, and would be able to process AI workloads more efficiently with fewer, but more powerful and denser, accelerated servers. For more insights and information on technology drivers shaping the server and data center infrastructure market, take a look at our Data Center Capex report.